SDFNet - Boring 2D Flat images to fast-rendered 3D

SDFNet aims to go from boring, flat 2D images to less boring Signed Distance Functions (SDFs) that represent them in 3D, for simple, symmetric shapes. It uses Neural Radiance Fields (NeRFs) and Constructive Solid Geometry (CSG) to go from images to 3D objects, and from those objects to SDFs for the shapes.
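For readers new to the two ideas, here is a minimal sketch of what an SDF and a CSG operation look like in code. It is illustrative only and is not taken from the SDFNet or CSGNet code; the function names (`sphere_sdf`, `box_sdf`, `carved_box`) and the chosen sizes are assumptions made for this example. An SDF returns a negative value inside a shape and a positive value outside, and CSG operations can be approximated by combining distances with `min` and `max`.

```python
import math

def sphere_sdf(p, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from point p to a sphere (negative inside)."""
    return math.dist(p, center) - radius

def box_sdf(p, half_extents=(1.0, 1.0, 1.0)):
    """Signed distance from p to an axis-aligned box centred at the origin."""
    q = [abs(c) - h for c, h in zip(p, half_extents)]
    outside = math.sqrt(sum(max(c, 0.0) ** 2 for c in q))
    inside = min(max(q), 0.0)
    return outside + inside

# CSG operations expressed on distance values.
# Note: intersection and difference yield a bound on the true distance,
# which is still sufficient to tell inside from outside.
def union(d1, d2):
    return min(d1, d2)

def intersection(d1, d2):
    return max(d1, d2)

def difference(d1, d2):
    return max(d1, -d2)

def carved_box(p):
    """Hypothetical shape: a unit box with a small sphere removed from its centre."""
    return difference(box_sdf(p), sphere_sdf(p, radius=0.5))

print(carved_box((0.0, 0.0, 0.0)))  # positive: the centre was carved away
print(carved_box((0.9, 0.0, 0.0)))  # negative: still inside the box wall
```

Because a whole shape collapses into one function of position, a CSG tree of simple primitives stays compact and cheap to evaluate, which is what makes SDFs attractive for fast rendering.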

- The report for the work done on this project in Sem 1 [AY 2022-23] can be found here.

- All other deliverables can be found here.

We draw heavily on the code accompanying the paper CSGNet: Neural Shape Parser for Constructive Solid Geometry (CVPR 2018). We also reference the paper on Neural Radiance Fields, whose code can be found here.

Week - 1

- Discussed and formulated a brief plan of action and the direction we would like the project to take.

- Decided to work initially in two directions:

  - 3D reconstruction of objects from their 2D images

  - Improved implementation of Neural Rendering

Week - 2

- Identified and collected previous research in these domains, such as CSGNet and Pixel2Mesh.

- Formulated the problem statement: improving CSGNet by adding more shape information, introducing color reconstruction, and creating a 2D-image-to-3D-mesh pipeline.

- Began iteration one: reconstructing the 3D model of a bottle. This was integral to understanding the nitty-gritty and the subtle details that were missed in discussions and the research overview.

- Continued research on papers covering Neural Rendering and Constructive Solid Geometry.

Week - 3

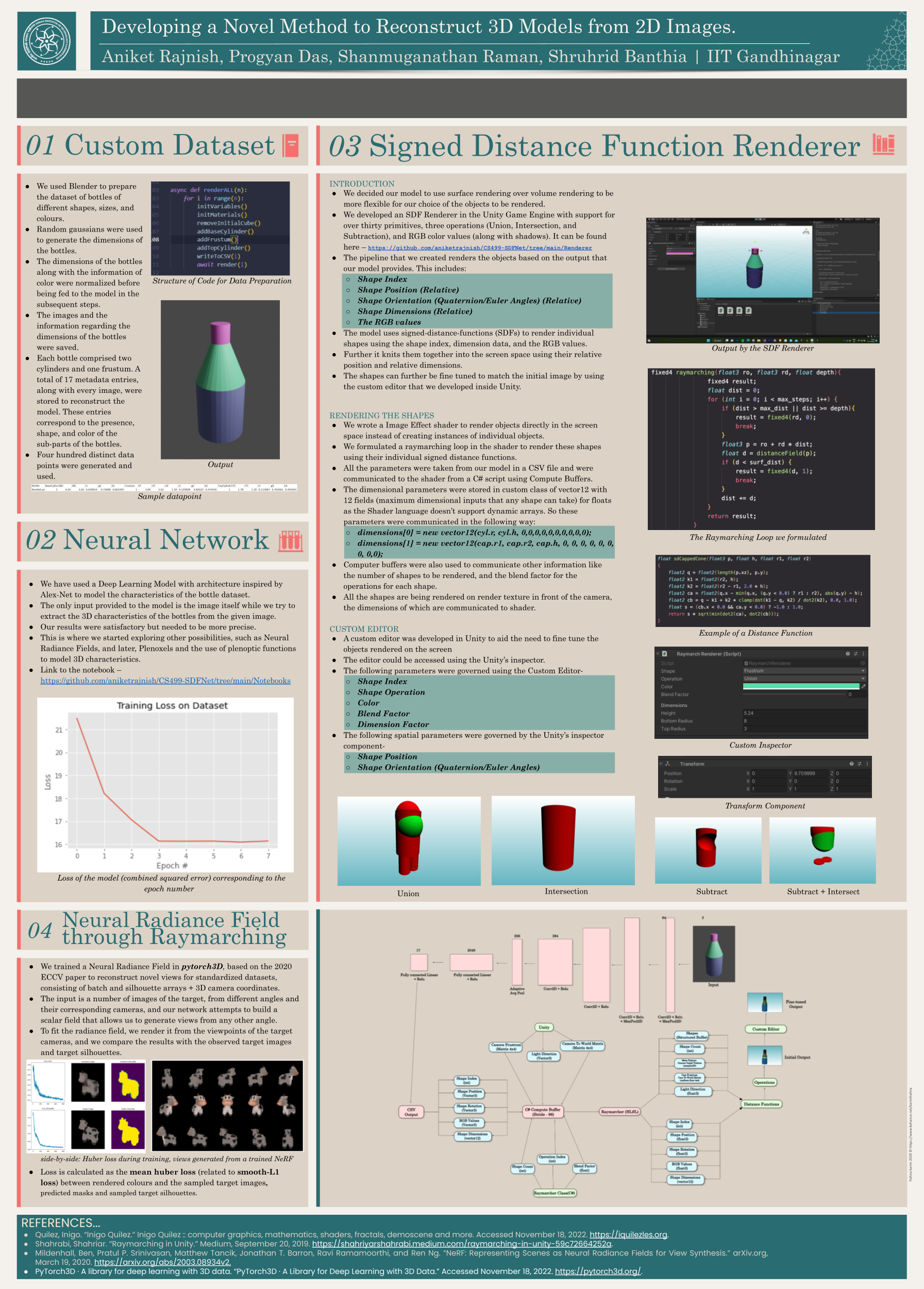

- Created a dataset using Blender and developed a model to predict the required dimensions from a 2D image. The results of this first iteration were sub-par (MSE ~16), but they helped us identify the next direction: we needed a way to extract 3D information from the images before feeding it to the neural network.

- Created a repo to collaborate.

- We began analyzing papers such as Pixel2Mesh for the above problem.

- Continued research on papers covering Neural Rendering.
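To give a feel for what an MSE of about 16 means for the dimension-prediction model, here is a small worked example. The dimension values are hypothetical and chosen only to make the arithmetic easy to follow; they are not from our dataset, and the helper `mean_squared_error` is written for this illustration.

```python
def mean_squared_error(predicted, target):
    """Average of squared differences between predicted and true values."""
    if len(predicted) != len(target):
        raise ValueError("predicted and target must have the same length")
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(target)

# Hypothetical bottle dimensions (height, body width, neck width).
true_dims = [10.0, 4.0, 2.0]
predicted_dims = [14.0, 8.0, 6.0]  # each value is off by 4 units

print(mean_squared_error(predicted_dims, true_dims))  # 16.0
```

In this toy case an MSE of 16 corresponds to every predicted dimension being off by 4 units, which is a large error when the dimensions themselves are of a similar size.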

Week - 4

- Implemented an SDF renderer (built from the ground up in Unity) to reconstruct images from the data provided by the first-iteration model.

- Identified new directions to pursue: one is Pixel2Mesh followed by reconstruction using CSGNet, and another is exploring whether the function inside a Neural Radiance Field can be used for 3D reconstruction.

Blender Dataset

Rendered using the SDF Renderer
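SDFs are commonly rendered with sphere tracing, where a ray advances by the distance the SDF reports until it reaches a surface. The sketch below shows that general technique in Python. It is not our Unity renderer, and the names `sphere_trace`, `max_steps`, `max_dist` and `eps` are assumptions chosen for this example.

```python
import math

def sphere_sdf(p, radius=1.0):
    """Signed distance from p to a sphere centred at the origin."""
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - radius

def sphere_trace(origin, direction, sdf, max_steps=64, max_dist=20.0, eps=1e-4):
    """March along a unit-length ray; return the hit distance or None on a miss."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:       # close enough to the surface: report a hit
            return t
        t += dist            # safe step: nothing is closer than dist
        if t > max_dist:     # ray left the scene
            return None
    return None

# A ray fired straight at the sphere hits it; an offset ray misses.
hit = sphere_trace((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), sphere_sdf)
miss = sphere_trace((0.0, 2.0, -3.0), (0.0, 0.0, 1.0), sphere_sdf)
print(hit, miss)
```

Running one such ray per pixel and shading the hit points gives an image of the shape, and the step size adapts automatically to how close the ray is to the surface.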

- We stopped updating the blog for the past couple of months, but we’ll update it soon. Meanwhile, the report detailing all the work done can be found here.

- Also, this is the poster for the same:

Contact

To ask questions, please email any one of us: Aniket, Dhyey, Progyan and Shruhrid.